Innovation @ Ntegra

January 19, 2026

Technical Perspective on Artificial Intelligence within DevOps

The working environment is constantly evolving, placing individuals and organisations under continuous pressure to adapt to new practices, processes and constraints. This change has accelerated sharply with the rise and widespread adoption of AI tools such as ChatGPT. AI's sudden prominence was a major feature of the products and presentations at Ntegra's Spring Silicon Valley Tech Summit (SVTS) this year.

AI technology has been developing for decades, but recent advances in computing power and data collection have rapidly boosted its adoption. AI now supports professionals and hobbyists alike in diverse tasks: from copywriting and coding to ideation and decision-making. This was apparent at the recent SVTS, where numerous products showcased the use of AI and machine learning tools and integrations.

Growing Popularity and Adoption of AI

The most notable example of this adoption is OpenAI's ChatGPT, which attracted over 100 million users in its first two months and boasts an estimated 13 million daily visitors. Like similar tools, it combines the output of a large language model (LLM) with the conversational format of a chatbot, providing an engaging, human-like interface for questions and answers. Users can ask follow-up questions, which encourages them to collaborate with the AI as they explore topics more deeply, enhancing understanding and potentially fostering greater creativity.

From our current perspective, the possibilities appear endless. For instance, given recent advances in speech recognition, it will be intriguing to see whether there is soon a greater shift towards voice-based AI in a conversational format, especially considering the anticipated rise of augmented reality (AR) technology.

The Integration of AI in Development and DevOps

Ntegra developers have already benefited from tools like GitHub Copilot, an AI-powered pair programmer that offers code suggestions directly within the developer's workflow. GitHub has also created a voice-driven format for Copilot, called GitHub Copilot Voice, which allows developers to describe their code using spoken natural language rather than relying on their keyboard. This is particularly useful for accessibility scenarios.

A frequent question is whether tools like these will replace developers. They are designed to enhance developers' existing skills, not to substitute for them: developers still need to understand the code and make logical decisions for specific use cases. This concept is known as 'augmented intelligence', which means using AI to extend human intelligence. It can be likened to using a car to travel to another city; you could walk, but now you have a tool that, if steered correctly, will get you there more quickly.

Recent techniques like Chain-of-Thought (CoT) prompting have helped models deliver more accurate responses to complex problems that need logical thinking, reasoning and multiple steps to solve. Models can comprehend problems and resolve them step-by-step, not only providing accurate answers but also transparently showing users the process used to arrive at a solution.
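As a rough illustration of the idea, the sketch below builds a prompt with and without a step-by-step instruction and pulls the final answer out of a reasoned response. The function names, the prompt wording and the `Answer:` convention are assumptions made for this example; they are not taken from any particular model's API, and no model is called here.

```python
def build_prompt(question: str, chain_of_thought: bool = False) -> str:
    """Return a plain-text prompt, optionally asking for step-by-step reasoning."""
    prompt = f"Question: {question}\n"
    if chain_of_thought:
        # Hypothetical wording: ask for intermediate reasoning before the answer.
        prompt += (
            "Let's think step by step, then give the final answer "
            "on a line starting with 'Answer:'.\n"
        )
    else:
        prompt += "Answer:"
    return prompt


def extract_answer(response: str) -> str:
    """Return the text after the last 'Answer:' line, or the whole response."""
    for line in reversed(response.splitlines()):
        if line.strip().startswith("Answer:"):
            return line.split("Answer:", 1)[1].strip()
    return response.strip()


if __name__ == "__main__":
    print(build_prompt("A build takes 4 minutes and runs 6 times. Total time?", True))
    # A made-up response, showing the visible reasoning a user would see.
    sample = "Step 1: 4 minutes x 6 runs = 24 minutes\nAnswer: 24 minutes"
    print(extract_answer(sample))
```

The reasoning lines stay available to the reader, which is the transparency benefit described above, while the final line gives tooling something simple to parse.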

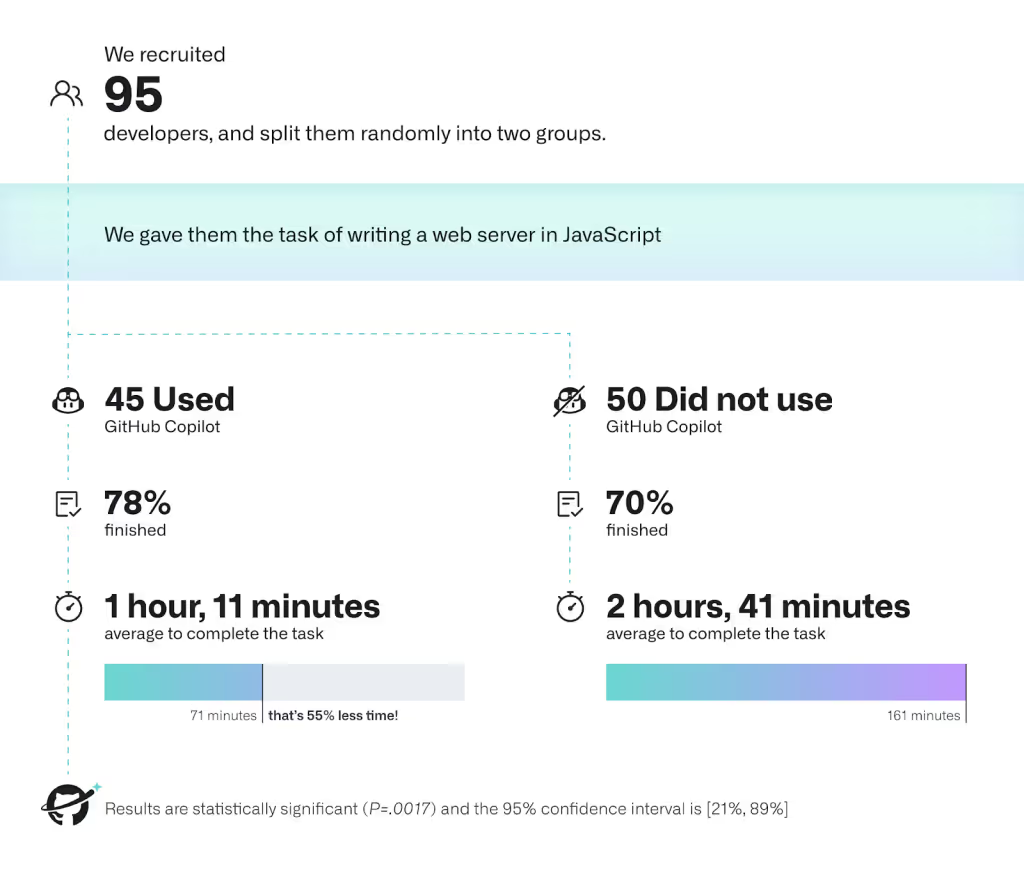

After GitHub Copilot's public release, GitHub conducted research to measure its impact on developer productivity. They found that the group using Copilot had a higher completion rate and finished tasks 55% faster than those who didn't use it. Other notable improvements recorded include increased code quality and reduced bugs, which are often caused by typing errors or incorrect syntax. Developers reported enhanced productivity and job satisfaction, as Copilot allowed them to conserve mental energy. In summary, the implementation of this AI-powered tool enabled developers to write code more efficiently, accurately and quickly.

AI can also speed up deployments by automating and configuring DevOps workflows. Beyond assessing code for bugs, AI tools can identify potential risks and vulnerabilities and then monitor deployments for compliance and real-time anomaly detection. These tools are trained to learn how the code should run, which enables them to provide a robust threat response. By integrating AI within the DevSecOps workflow, risks can be identified and addressed early on, significantly reducing the likelihood that security threats are overlooked by human analysts.
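To make the anomaly-detection idea concrete, here is a deliberately simple sketch. It swaps the trained model described above for a plain statistical baseline (a z-score against pre-deployment measurements), and the metric names, sample values and threshold are all invented for illustration.

```python
from statistics import mean, stdev


def find_anomalies(baseline, recent, threshold=3.0):
    """Return (index, value) pairs in `recent` that deviate strongly from `baseline`.

    A value is flagged when it lies more than `threshold` sample standard
    deviations from the baseline mean.
    """
    mu = mean(baseline)
    sigma = stdev(baseline)
    if sigma == 0:
        # A perfectly flat baseline: any different value counts as anomalous.
        return [(i, v) for i, v in enumerate(recent) if v != mu]
    return [(i, v) for i, v in enumerate(recent) if abs(v - mu) / sigma > threshold]


# Hypothetical latency samples (milliseconds) before and after a deployment.
baseline_latency_ms = [120, 118, 125, 122, 119, 121, 123, 120]
post_deploy_latency_ms = [121, 124, 310, 119]

anomalies = find_anomalies(baseline_latency_ms, post_deploy_latency_ms)

if __name__ == "__main__":
    print(anomalies)  # [(2, 310)]
```

A production tool would learn a much richer picture of normal behaviour, but the shape is the same: establish what normal looks like, then flag departures from it early.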

Ethical Implications of AI

The use of AI raises several crucial ethical concerns. Organisations need to be aware that large language models (LLMs) can carry biases, as they depend on the training data they are provided. Typically, these models are closed source and considered a "black box", owned and operated by an external organisation. Reasons for them remaining this way may include protecting intellectual property, ensuring compliance and data security and maintaining control over built-in safety features. As a result, users must generally place trust in the organisation behind the model and this should be considered when evaluating the responses received.

Transparency in data collection for training models is also a contentious issue that organisations are addressing when developing LLMs. Amazon CodeWhisperer, a code suggestion tool, has built-in reference tracking. If it recognises a code suggestion resembling its training data, CodeWhisperer tries to provide the associated source and licensing information.
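The general idea of reference tracking can be sketched as a similarity check between a suggestion and a catalogue of known snippets. This is not how CodeWhisperer works internally, which the article does not describe; the catalogue, the file path, the licence value and the 0.9 threshold below are all hypothetical.

```python
from difflib import SequenceMatcher

# Hypothetical catalogue of known code with source and licence metadata.
KNOWN_SNIPPETS = [
    {
        "code": "def add(a, b):\n    return a + b",
        "source": "example-repo/math_utils.py",
        "licence": "MIT",
    },
]


def find_reference(suggestion, known=KNOWN_SNIPPETS, threshold=0.9):
    """Return the closest catalogue entry if it is similar enough, else None."""
    best, best_score = None, 0.0
    for entry in known:
        # ratio() gives a similarity score between 0.0 and 1.0.
        score = SequenceMatcher(None, suggestion, entry["code"]).ratio()
        if score > best_score:
            best, best_score = entry, score
    return best if best_score >= threshold else None


if __name__ == "__main__":
    match = find_reference("def add(a, b):\n    return a + b")
    if match:
        print(f"Resembles {match['source']} ({match['licence']})")
```

Surfacing the source and licence alongside a suggestion lets the developer decide whether the code can be used, which is the transparency benefit discussed above.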

Furthermore, AI tools have faced criticism for inaccuracy and for providing users with false or made-up information, known as hallucinations. These inaccuracies can be problematic, as users might unquestioningly accept confidently presented responses and then act on or reuse incorrect information.

It will be interesting to observe how AI ethics evolve, addressing accuracy, bias and privacy issues to enhance trust in AI-based products. Data provenance tools could be employed to improve accuracy and attribution, building on the identification of data sources. Additionally, using consensus across multiple LLMs might increase confidence and trust in responses to a problem.
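One way to picture consensus across models is a simple majority vote over their answers. The sketch below assumes the answers have already been collected as strings from several models; it calls no real model API, and the normalisation and the agreement threshold are illustrative choices.

```python
from collections import Counter


def consensus(answers, min_agreement=0.5):
    """Return (answer, share) where `answer` is the majority answer or None.

    Answers are compared after trimming whitespace and lower-casing. An answer
    is accepted only if strictly more than `min_agreement` of the non-empty
    answers agree on it.
    """
    normalised = [a.strip().lower() for a in answers if a.strip()]
    if not normalised:
        return None, 0.0
    top, count = Counter(normalised).most_common(1)[0]
    share = count / len(normalised)
    return (top if share > min_agreement else None), share


if __name__ == "__main__":
    # Made-up answers from three hypothetical models to the same question.
    answer, share = consensus(["Paris", "paris ", "Lyon"])
    print(answer, round(share, 2))
```

Agreement does not guarantee correctness, since models trained on similar data can share the same mistake, so a low share is best treated as a prompt for human review.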

Preparing for the Future with AI

Despite potential risks, AI looks set to play a larger role in our lives. Disruptive technologies often face scepticism and criticism, but it is essential to embrace the potential changes. By recognising the upside of AI and understanding its usage and direction, we can position ourselves to harness the benefits it promises.

Investing time in learning about AI's capabilities and its impact on your organisation is a good idea. Efforts should focus on identifying opportunities and vulnerabilities. Could AI improve current processes or identify something that needs to be addressed before a potential AI tool disrupts it? How can AI improve your current workforce and help them work more efficiently?

Organisations should consider leveraging their existing data. Valuable and insightful information is often collected and available but goes unused. AI tools can help improve processes, automate repetitive tasks and uncover new insights using this data. Nevertheless, it is important that appropriate emphasis is placed on identifying any ethical or legal considerations.

When dealing with emerging technologies, organisations must develop a strategy to assess the technology, plan for integration and determine its future use to enhance operations and maintain competitiveness in the market.

Conclusion

AI is increasingly being integrated into our daily lives, affecting various aspects of technology and transforming the way we work. It is crucial to be aware of AI's capabilities and understand how to leverage them, adapting our work to any potential opportunities and risks. As developments occur at a rapid pace, we can expect numerous changes and challenges from AI in the coming years.

Sean Bailey,

Senior Developer at Ntegra

*Disclaimer* The recent copyright and privacy lawsuits against OpenAI were not yet public knowledge when this article was written.
